News

[2026/02]🎉 TIGeR gets accepted to ICRA 2026! See you in Vienna, Austria!

[2025/12]🎉 Honored to be supported by the China Association for Science and Technology Youth Ph.D. Talent Support Project!

[2025/11]🎉 One paper, Prune4Web, is accepted to AAAI 2026! See you in Singapore!

[2025/09]🎉 RefSpatial-Bench is now used by Qwen3-VL and Gemini-Robotics-1.5 to evaluate spatial reasoning in complex scenes!

[2025/09]🎉 RoboRefer gets accepted to NeurIPS 2025! See you in San Diego, USA!

[2025/06]🎉 MineDreamer and RH20T-P are both selected as Oral Presentations at IROS 2025! See you in Hangzhou, China!

[2025/05]🎉 WorldSimBench gets accepted to ICML 2025 and selected as Oral Presentation in CVPR 2025 @ WorldModelBench!

[2025/02]🎉 Code-as-Monitor is accepted at CVPR 2025! Come and see our demos!

[2024/12]🎉 AGFSync gets accepted to AAAI 2025! See you in Philadelphia, USA!

[2024/05]🎉 Honored to organize two workshop challenges (TiFA, MFM-EAI) at ICML 2024!

[2024/02]🎉 MP5 is accepted at CVPR 2024! Please check out the demos on our webpage!

Selected Publications ( *, †, ‡ indicate equal contribution, corresponding author, and project leader, respectively.)

My current interest lies in Embodied Agents, at the intersection of Multimodal Large Language Models and Embodied AI. I am particularly interested in high-level planning and low-level control with spatio-temporal intelligence, working towards a generalist agent in complex real-world environments.

Representative works are highlighted.


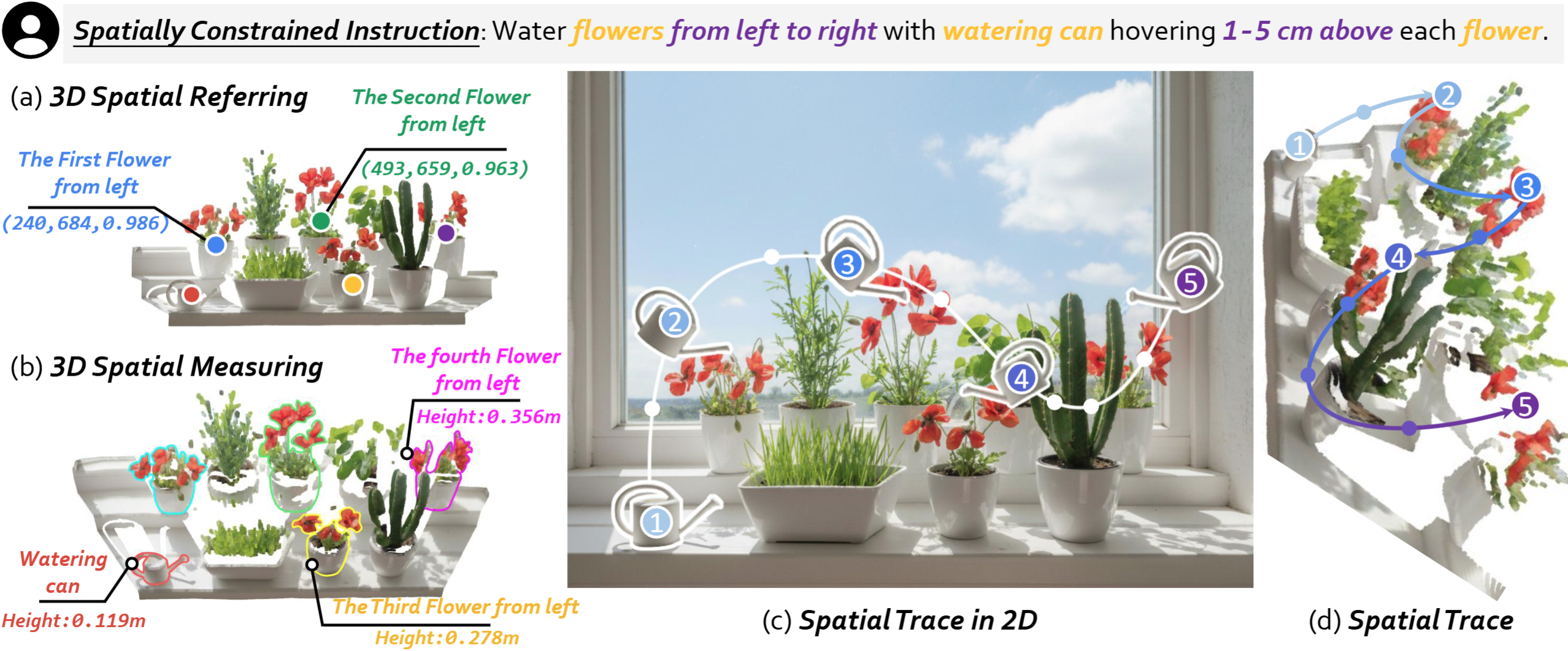

RoboTracer: Mastering Spatial Trace with Reasoning in Vision-Language Models for Robotics

Enshen Zhou *,

Cheng Chi *‡,

Yibo Li*,

Jingkun An*,

Jiayuan Zhang,

Shanyu Rong,

Yi Han,

Yuheng Ji,

Mengzhen Liu,

Pengwei Wang,

Zhongyuan Wang,

Tiejun Huang,

Lu Sheng†,

Shanghang Zhang†

[Paper] /

[Project] /

[Code] /

[机器之心] /

[BibTeX]


TL;DR: From what you say to where it moves using RoboTracer!

arXiv 2025


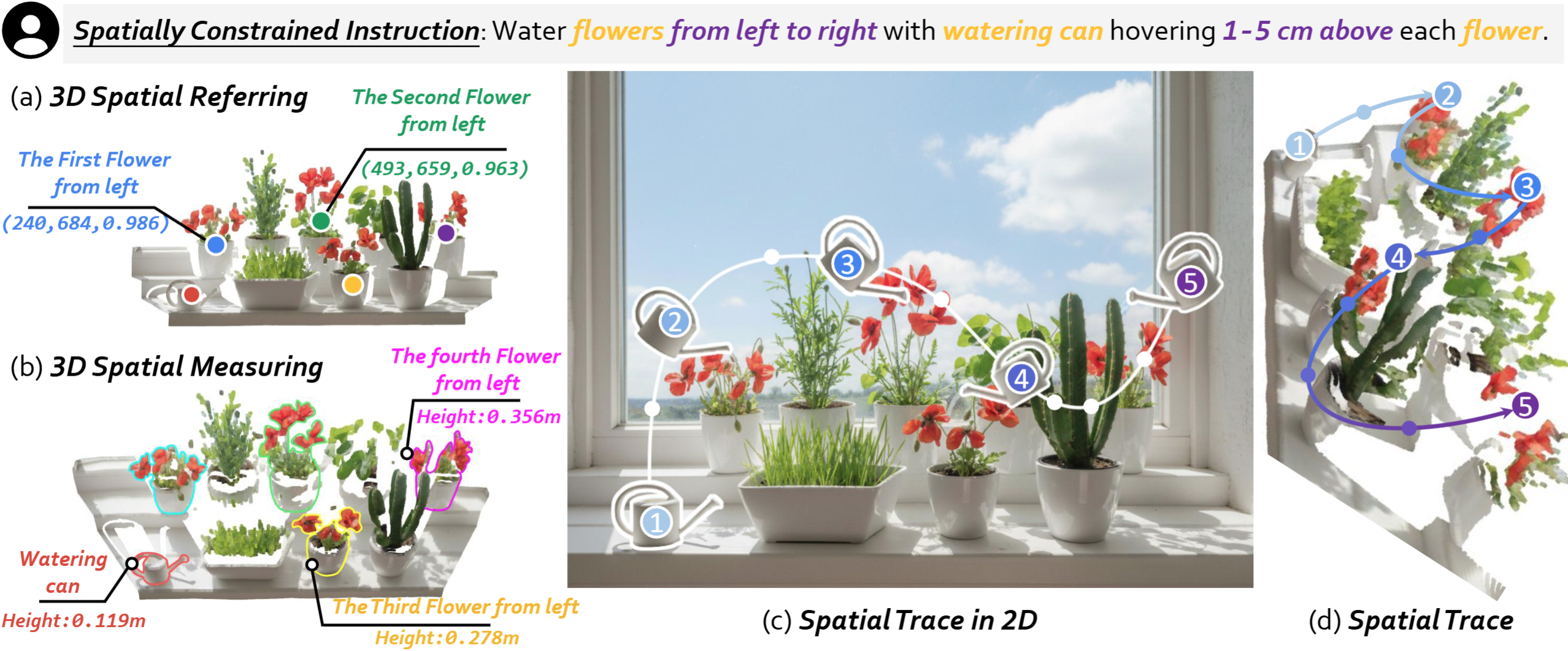

TIGeR: Tool-Integrated Geometric Reasoning in Vision-Language Models for Robotics

Yi Han *,

Cheng Chi *‡,

Enshen Zhou *,

Shanyu Rong,

Jingkun An,

Pengwei Wang,

Zhongyuan Wang,

Lu Sheng†,

Shanghang Zhang†

[Paper] /

[Project] /

[Code] /

[BibTeX]


TL;DR: Equipping VLMs to perform accurate geometric reasoning for robotics!

ICRA 2026


RoboRefer: Towards Spatial Referring with Reasoning in Vision-Language Models for Robotics

Enshen Zhou *,

Jingkun An *,

Cheng Chi *‡,

Yi Han,

Shanyu Rong,

Chi Zhang,

Pengwei Wang,

Zhongyuan Wang,

Tiejun Huang,

Lu Sheng†,

Shanghang Zhang†

[Paper] /

[Project] /

[Code] /

[机器之心] /

[BibTeX]


TL;DR: From words to exactly where you mean using RoboRefer!

NeurIPS 2025


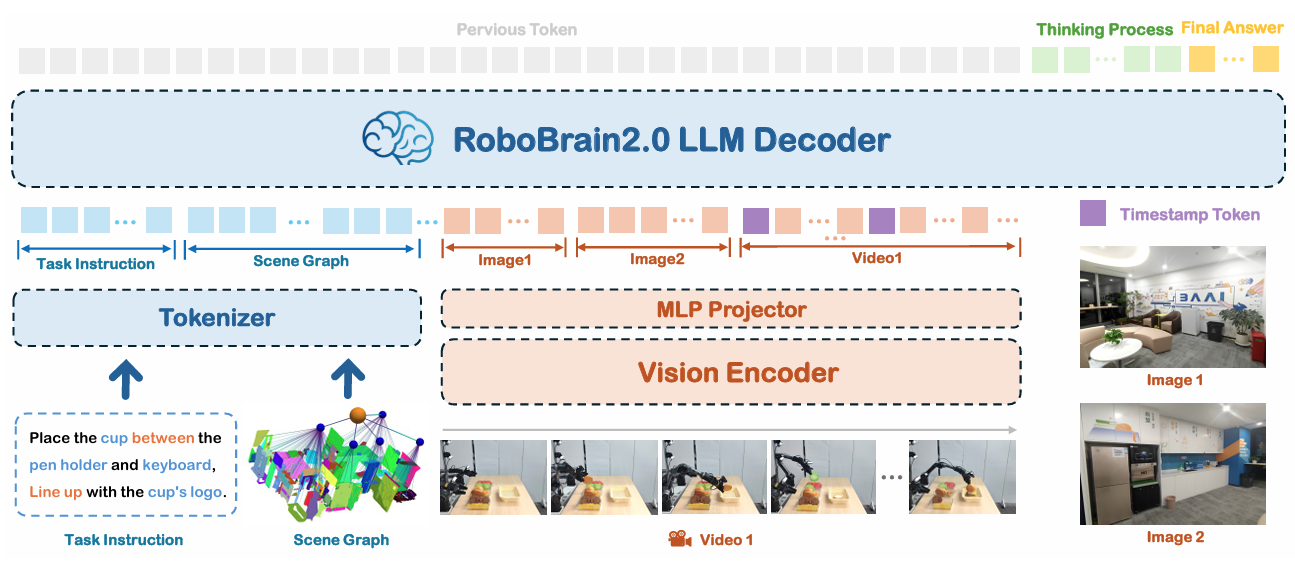

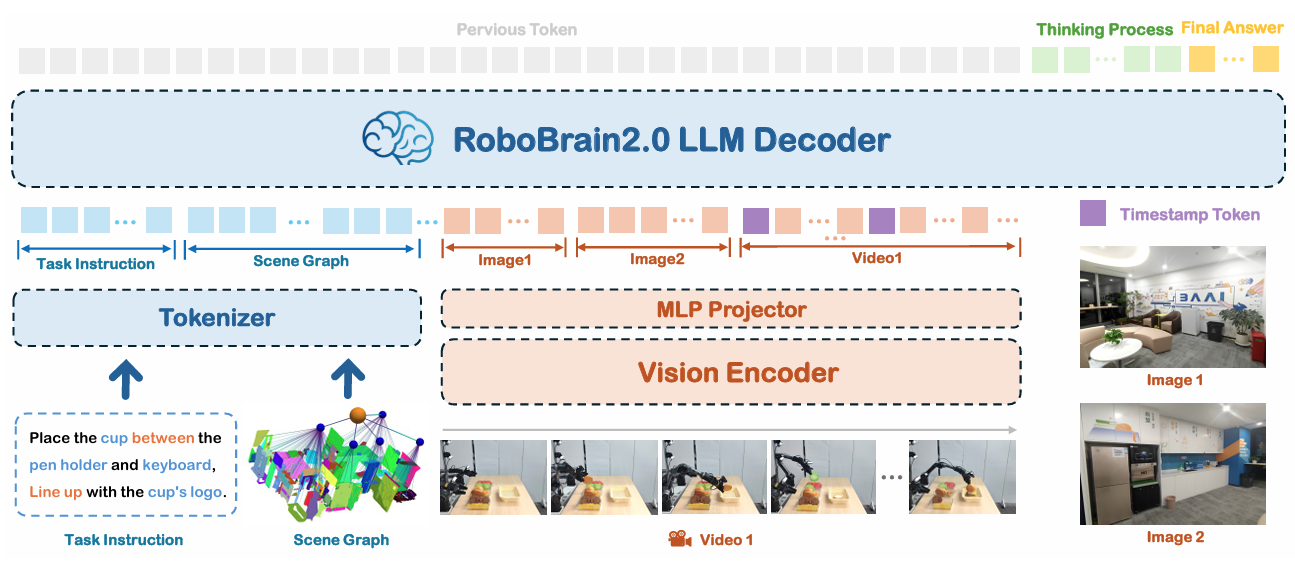

RoboBrain 2.0: See Better. Think Harder. Do Smarter.

BAAI RoboBrain Team (Enshen Zhou is the core dataset contributor)

[Paper] /

[Project] /

[Code] /

[BibTeX]


TL;DR: The most powerful open-source embodied brain model to date!

Technical Report


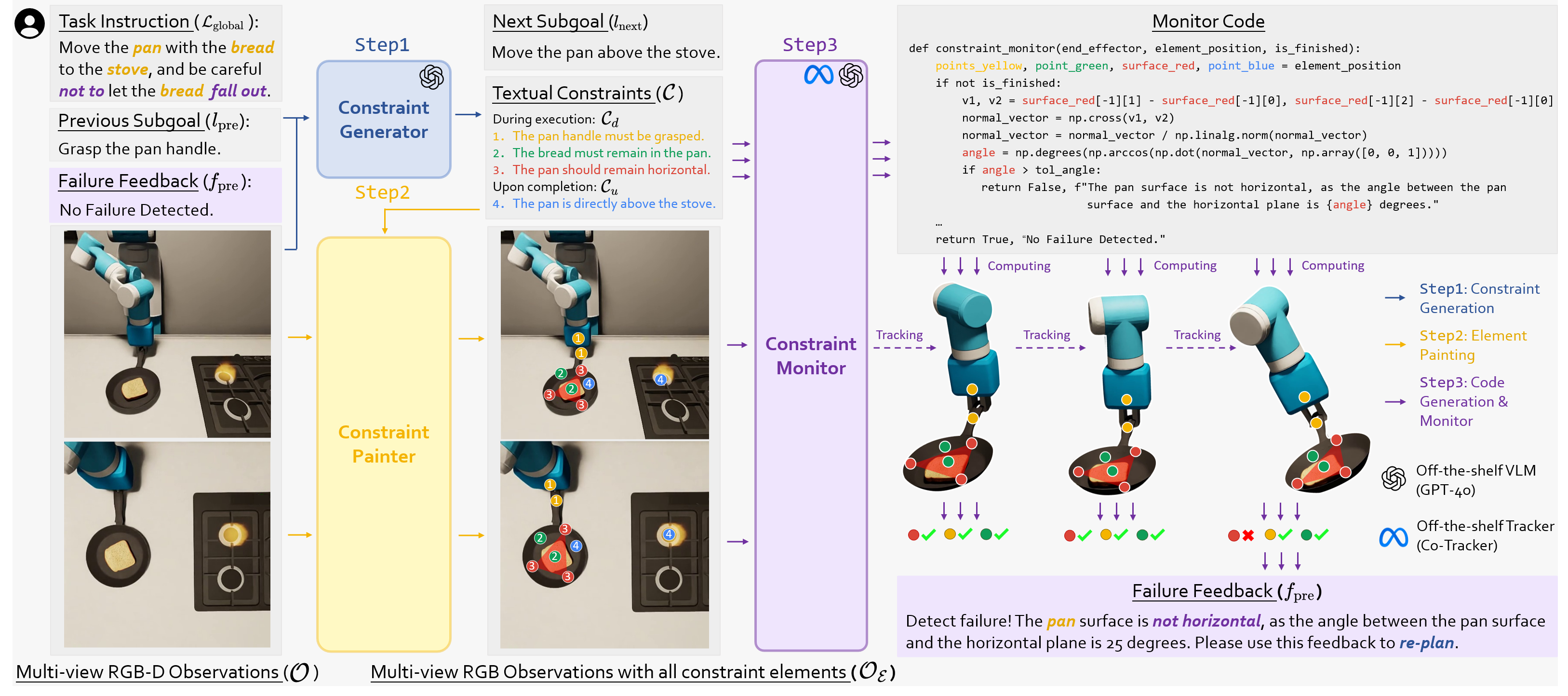

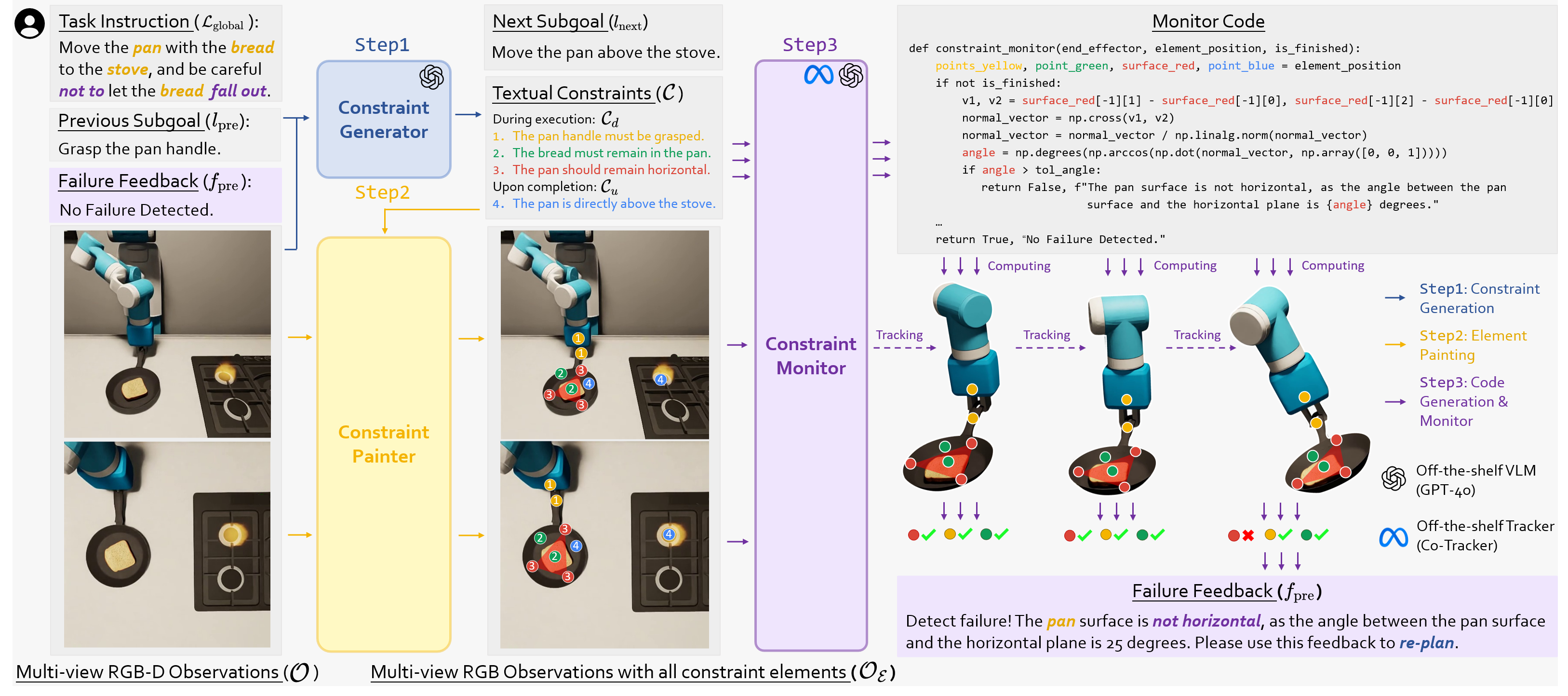

Code-as-Monitor: Constraint-aware Visual Programming for Reactive and Proactive Robotic Failure Detection

Enshen Zhou *,

Qi Su *,

Cheng Chi *†,

Zhizheng Zhang,

Zhongyuan Wang,

Tiejun Huang,

Lu Sheng†,

He Wang†

[Paper] /

[Project] /

[Bilibili Video] /

[BibTeX]


TL;DR: Enjoy open-world failure detection with real-time high precision!

CVPR 2025


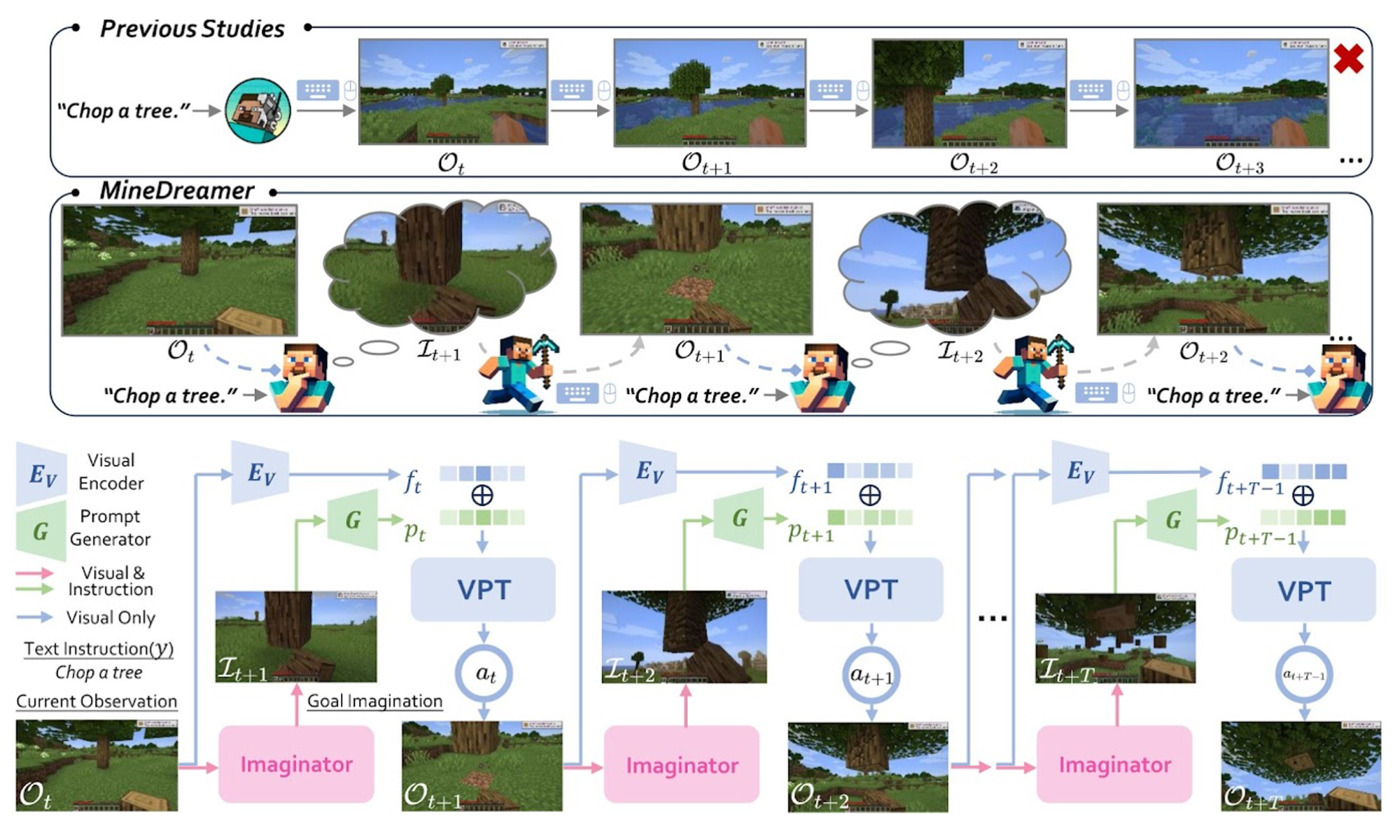

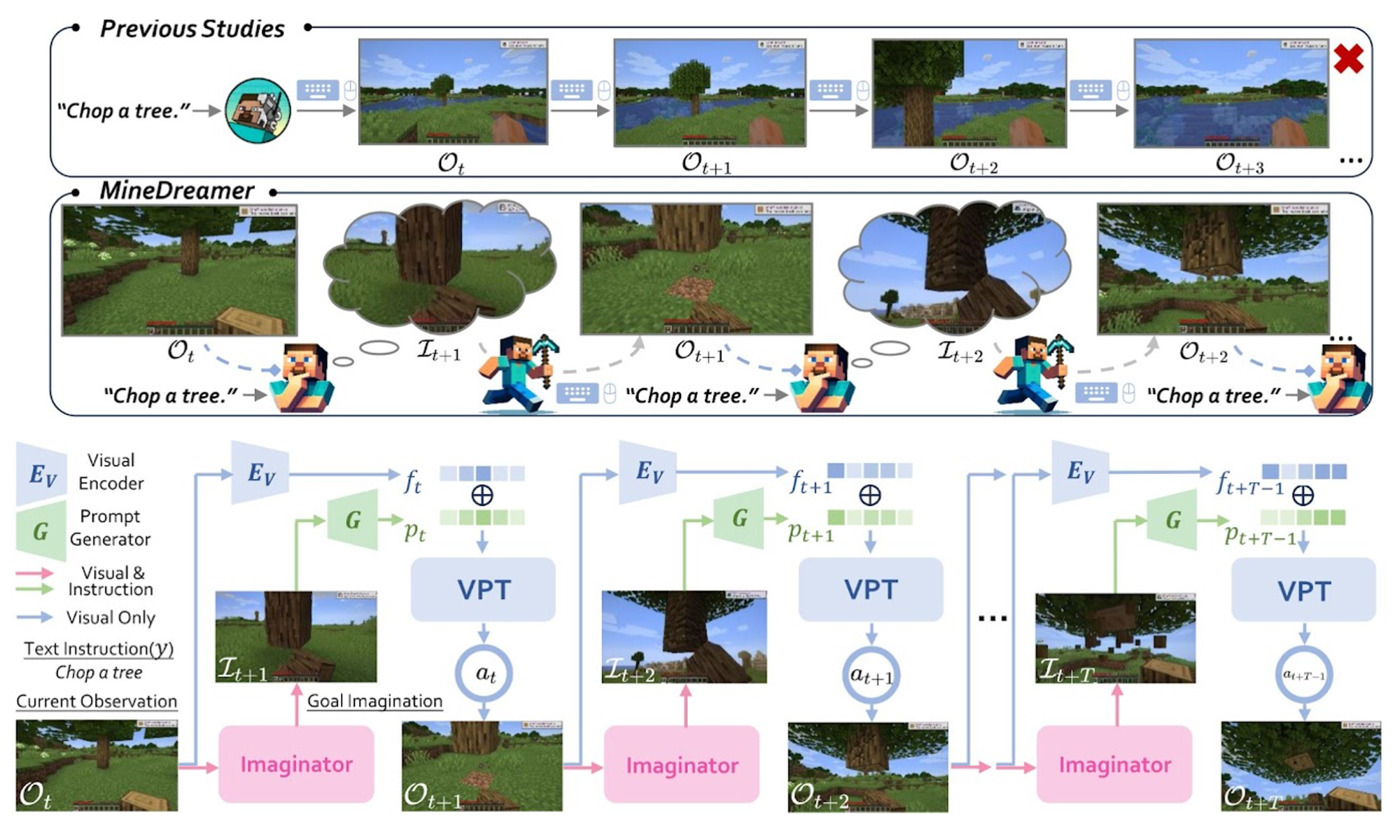

MineDreamer: Learning to Follow Instructions via Chain-of-Imagination for Simulated-World Control

Enshen Zhou *,

Yinran Qin *,

Zhenfei Yin,

Yuzhou Huang,

Ruimao Zhang†,

Lu Sheng†,

Yu Qiao,

Jing Shao‡

[Paper] /

[Project] /

[Code] /

[BibTeX]


TL;DR: Use imagination to guide the agent itself on how to act step by step!

IROS 2025, Oral Presentation

NeurIPS 2024 @ OWA


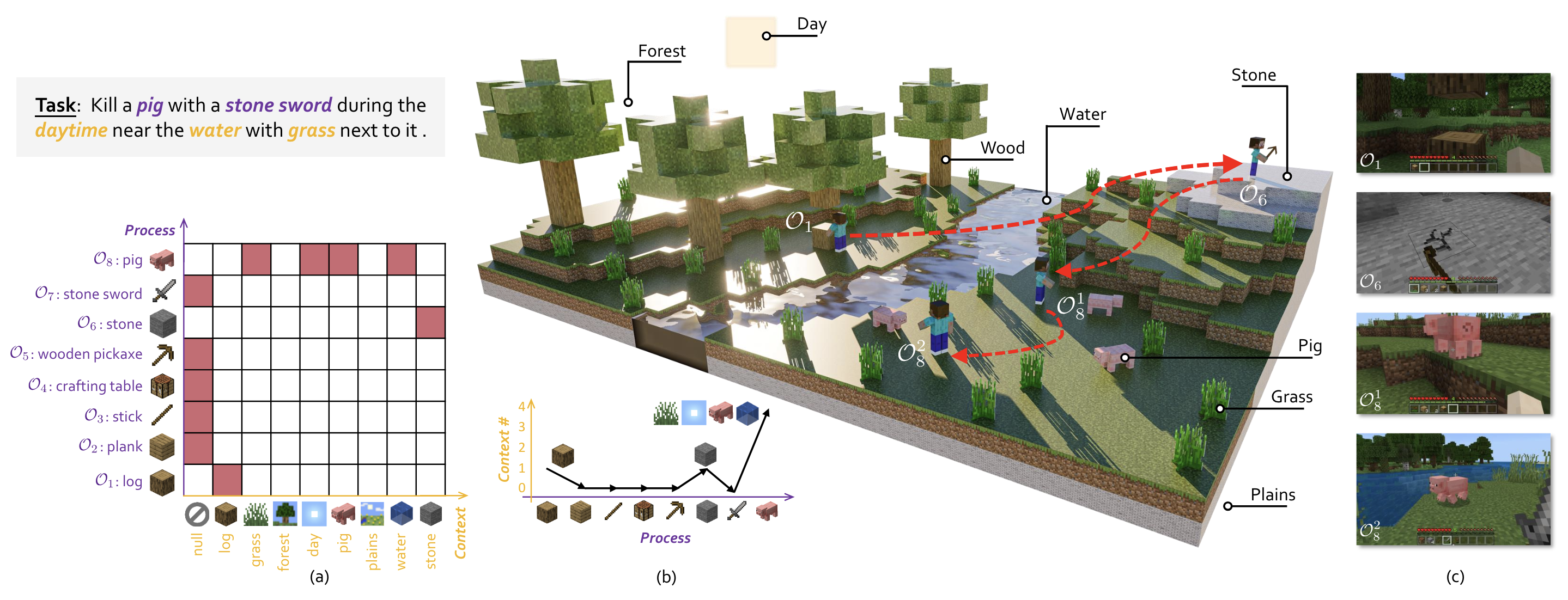

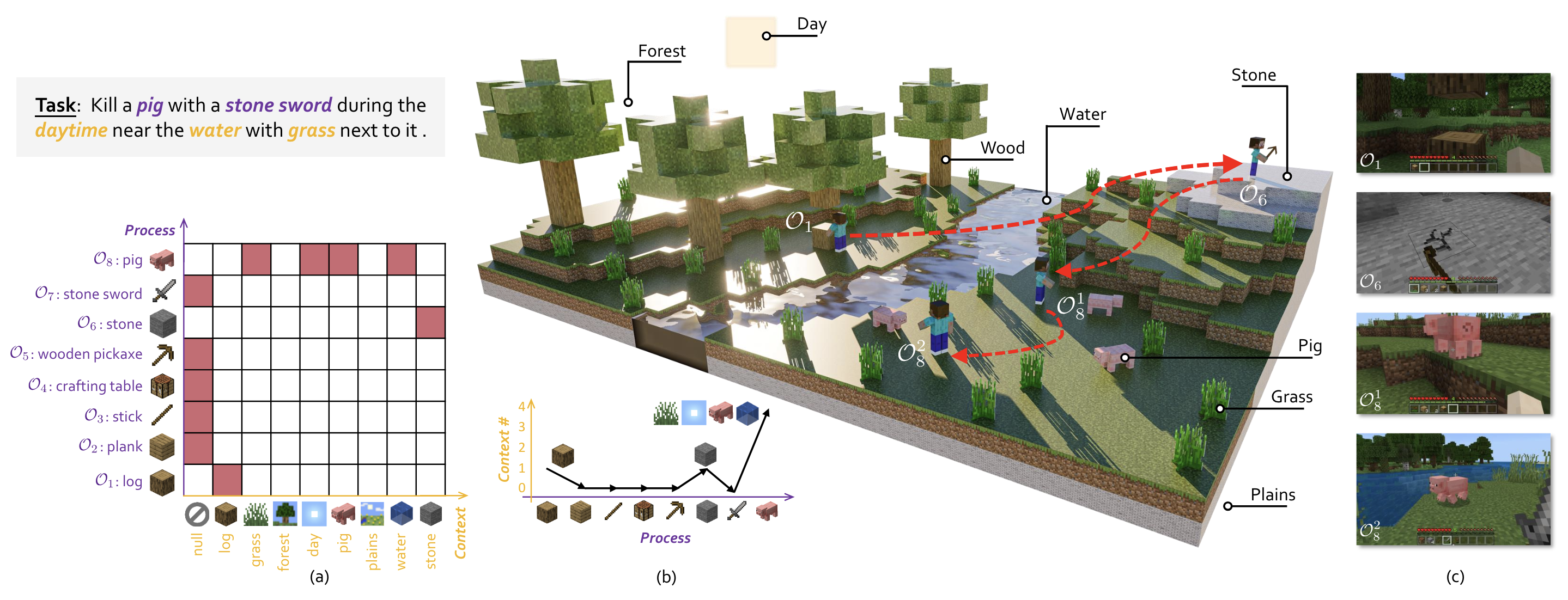

MP5: A Multi-modal Open-ended Embodied System in Minecraft via Active Perception

Yinran Qin *,

Enshen Zhou *,

Qichang Liu *,

Zhenfei Yin,

Lu Sheng†,

Ruimao Zhang†,

Yu Qiao,

Jing Shao‡

[Paper] /

[Project] /

[Bilibili Video] /

[Code] /

[BibTeX]


TL;DR: A multi-agent system can solve endless open-ended long-horizon tasks!

CVPR 2024


Services

Workshop Challenge Organizer:

- Trustworthy Multi-modal Foundation Models and AI Agents (TiFA) at ICML 2024.

- Multi-modal Foundation Model meets Embodied AI (MFM-EAI) at ICML 2024.

Reviewer: CVPR 2026, ECCV 2026, ICML 2026, AAAI 2026
